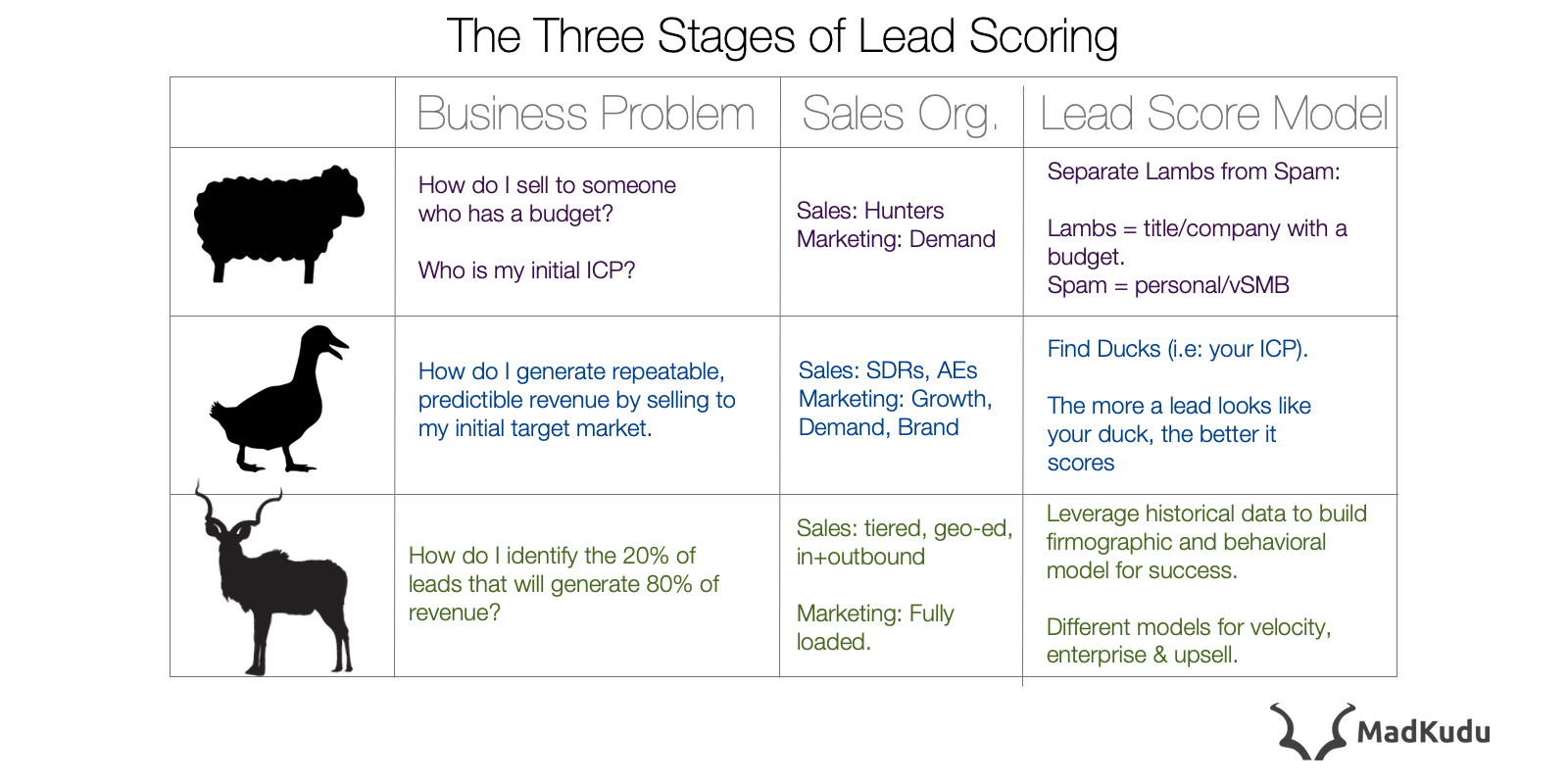

Lambs, Kudus and Ducks Score: The Three Stages of Lead Scoring

In the past year, we talked with hundreds of SaaS companies about their marketing technology infrastructure. While we're always excited when a great company comes to us looking to leverage MadKudu in their customer journey, we've noticed two very common scenarios when it comes to marketing technology. We have seen (1) companies looking to put complicated systems in place too early, and (2) companies that are very advanced in their sales organization that are impeding their own growth with a basic/limiting MarTech stack.

I want to spend some time diving into how we see lead scoring evolving as companies scale. It is only natural that the infrastructure that helped you get from 0-1 be reimagined to go from 1-100, and again from 100-1000 - and so on.

Scaling up technical infrastructure is more than spinning up new machines - it’s sharding and changing the way you process data, distributing duplicate data across multiple machines to assure worldwide performance. Likewise scaling up sales infrastructure is more than hiring more SDRs - it’s segmenting your sales team by account value (SMB, MM, Ent.), territory (US West, US East, Europe, etc.), and stage of the sales process (I/O SDR, AE, Implementation, Customer Success).

Our marketing infrastructure invariably evolves - the tiny tools that we scraped together to get from 0-1 won’t support a 15-person team working solely on SEO, and your favorite free MAP isn’t robust enough to handle complex marketing campaigns and attribution. We add in new tools & methods, and we factor in new calculations like revenue attributions, sales velocity & buyer persona, often requiring transitioning to more robust platforms.

With that in mind, let's talk about Lead Scores.

Lead Score (/lēd skôr/) noun.

A lead score is a quantification of the quality of a lead.

Companies at all stages use lead scoring because a lead score’s fundamental purpose never changes. The fundamental output never really changes either: the higher the score, the higher the quality of the lead. How we calculate a lead score, however, evolves as a company hits various stages of development.

Stage 1: “Spam or Lamb?” - Ditch the Spam, and Hunt the Lamb.

Early on, your sales team is still learning how to sell, who to sell to, and what to sell. Sales books advise hiring "hunters" early on, who will thrive off of the challenge of wading through the unknowns to close a deal. Any filtering based on who you think is a good fit may push out people you should be talking to. Marketing needs to provide hunters with tasty lambs they can go after, and you want them to waste as little time on Spam as possible (a hungry hunter is an angry hunter). Your lead score is binary: it’s too early to tell a good lamb from a bad lamb, so marketing serves up as many lambs as possible to hunters so they can hunt efficiently. Stepping back from my belabored metaphor for a second, marketing needs to enable sales to follow up quickly with fresh, high-quality leads. You want to avoid missing out on deals because you were talking to bad leads first, and you want to begin building a track record of what your ideal customer profile looks like.

Lambs vs. Spam

Distinguishing between Lambs (good leads) & Spam (bad leads) will be largely based on firmographic data about the individual and the company. A Lamb is going to be either a company with a budget or a title with a budget: the bigger the company (more employees), or the bigger the title (director, VP, CXO), the more budget they are going to have to spend. Spam, meanwhile, will be visible by its lack of information, either because there is none or because it's not worth sharing. At the individual level, a personal or student email will indicate Spam (hunters don't have time for non-businesses), as will a vSMB (very small business). While your product may target vSMBs, they often use personal emails for work anyway (e.g., DavesPaintingCo@hotmail.com) and when they do pay, they just want to put in a credit card (not worth sales' time). Depending on the size of the funnel and the product-market fit, this style of lead score should cover you up until your first 5 SDRs, your first 50 employees, 100 qualified leads per day, or $1 million ARR.
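To make the binary Lamb-vs-Spam check concrete, here is a minimal Python sketch of the rules described above. The function name `is_lamb`, the list of personal email domains, the title keywords, and the 10-employee vSMB cutoff are all illustrative assumptions rather than values from this article; adjust them to your own funnel.

```python
import re

# Hypothetical values for illustration only; none of these come from the article.
PERSONAL_EMAIL_DOMAINS = {"gmail.com", "hotmail.com", "yahoo.com", "outlook.com"}
SENIOR_TITLE_WORDS = {"director", "vp", "president", "chief", "cxo", "ceo", "cto", "cmo", "cfo"}
MIN_EMPLOYEES = 10  # assumed cutoff below which a company counts as a vSMB


def is_lamb(email, title=None, employees=None):
    """Return True for a Lamb (worth a hunter's time), False for Spam."""
    domain = email.rsplit("@", 1)[-1].lower()
    # Personal and student email addresses are treated as Spam.
    if domain in PERSONAL_EMAIL_DOMAINS or domain.endswith(".edu"):
        return False
    # A lead with no firmographic data at all is also treated as Spam.
    if title is None and employees is None:
        return False
    # "A title with a budget": match whole words so that, for example,
    # "Doctor" does not accidentally match "cto".
    title_words = set(re.findall(r"[a-z]+", title.lower())) if title else set()
    has_budget_title = bool(title_words & SENIOR_TITLE_WORDS)
    # "A company with a budget": big enough not to be a vSMB.
    has_budget_company = employees is not None and employees >= MIN_EMPLOYEES
    return has_budget_title or has_budget_company
```

Because the output is a plain yes/no, it can be dropped into a routing rule as-is: Lambs go to the hunters, and Spam is left out of the queue.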

Stage 2: “If it looks like a Duck” - point-based scoring.

Those lambs you hunted for 12-18 months helped inform what type of leads you’re going after, and your lead score will now need to prioritize leads which most look like your ideal customer profile (ICP): I call this “if it looks like a Duck.” Your Duck might look something like (A) Product Managers at (B) VC-backed, (C) US-based (D) software businesses with (E) 50-200 employees. Here our duck has five properties:

- (A) Persona = Product Managers

- (B) Companies that have raised venture capital

- (C) Companies based in the United States

- (D) Companies that sell software

- (E) Companies with 50-200 employees

Your lead score is going to be a weighted function of each of these variables. Is it critical they be venture-backed, or can you sell to self-funded software businesses with 75 employees as well? Is it a deal-breaker if they’re based in Canada or the U.K.? Your lead score will end up looking something like this:

f(Duck) = An₁ + Bn₂ + Cn₃ + Dn₄ + En₅

Here n₁…₅ are defined based on the importance of each attribute. Leads that look 100% like your ICP will score the highest, while good & medium-scoring leads should get lower prioritization but still be routed to sales as long as they meet at least 1 of your critical attributes and 1-2 other attributes. You can analyze how good your lead score was at predicting revenue on a quarterly basis by looking at false positives & false negatives. This lead score model will last you for a while with minor tweaks and adjustments; however, one of a number of things will eventually happen that will make your model no longer effective:
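As a rough illustration of the weighted function above, the Python sketch below treats each attribute (A through E) as 1 when the lead matches and 0 when it does not, then multiplies by its weight. The weights, the choice of which attributes are "critical", and the names `duck_score`, `route_to_sales`, and `confusion_counts` are all assumptions made up for this example.

```python
# Hypothetical weights (n1..n5); the article does not prescribe any values.
WEIGHTS = {
    "is_product_manager": 30,       # (A) persona
    "is_vc_backed": 15,             # (B) raised venture capital
    "is_us_based": 15,              # (C) based in the United States
    "sells_software": 25,           # (D) sells software
    "has_50_to_200_employees": 15,  # (E) company size
}
# Assumed for illustration: which attributes count as critical.
CRITICAL = {"is_product_manager", "sells_software"}


def duck_score(lead):
    """Weighted sum: add an attribute's weight when the lead matches it."""
    return sum(weight for name, weight in WEIGHTS.items() if lead.get(name))


def route_to_sales(lead):
    """Route when the lead matches at least 1 critical attribute plus at least 1 other."""
    matched = {name for name in WEIGHTS if lead.get(name)}
    return len(matched & CRITICAL) >= 1 and len(matched) >= 2


def confusion_counts(scored_leads, threshold):
    """Count false positives and false negatives from (score, became_revenue) pairs."""
    false_positives = sum(1 for score, won in scored_leads if score >= threshold and not won)
    false_negatives = sum(1 for score, won in scored_leads if score < threshold and won)
    return false_positives, false_negatives
```

Running `confusion_counts` each quarter against closed deals is one simple way to see whether the weights need adjusting: many false negatives suggest the model is too strict, while many false positives suggest it is too generous.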

Complex Sales Organization

A complex sales organization comes from having a nonlinear sales process, one where the simple rule "they look like our ICP, so they should talk to sales" no longer holds. Here are a few examples (not exhaustive):

- You may begin selling to different market segments with a tiered sales team. Your point-based lead scoring system only works for one market segment, or you’ll have to keep adjusting attributes as you tier your sales team, instead of the score adapting to their needs for increased sales velocity.

- You may begin upselling at scale. A good lead for upselling is based not on their firmographic profile but on their behavioral profile. Point-based behavioral attributes won’t work for new leads, and the score is often a result of aggregate behavior across multiple users & accounts, which is too complex to map to a point-based lead score model (this is often called Marketing Qualified Accounts).

- You may begin to find that a majority of your revenue is coming from outside your ICP, no matter how you weigh the various attributes. If you only accept leads that fit your ICP, you won’t hit your growth goals. Great leads are coming in that look nothing like what you expected, but you’re still closing deals with them. Your ICP isn’t wrong, but your lead score model needs to change. We've written about this in depth here.

When that happens, you’ll need to move away from manual management of a linear model to a more sophisticated model, one that adapts to the complex habitat in which your company now operates and wins by being smarter.

Stage 3: “Be like a Kudu” - Adapt to your surroundings

As your go-to-market strategy pans out and you begin to take market share across multiple industries/geos/company sizes, the role of your lead score will stay the same: fundamentally, it should provide a quantitative evaluation of the quality of each lead. Different types of leads are going to require different types of firmographic & behavioral data:

- Existing customers: product usage data, account-level firmographic data

- SMB (velocity) leads: account-level firmographic data.

- Enterprise leads: individual-level firmographic data across multiple individuals, analyzed at the account level.

Your model should adapt to each situation, ingest multiple forms of data, and contextually understand whether a lead is a good fit for any of your products or sales teams. As your product evolves to accommodate more use cases, your lead scoring model needs to evolve regularly, ingesting the most recent data and refreshing accordingly.

Predictive Lead Scoring

Predictive lead scoring adapts to the needs of growth-stage B2B businesses because the model is designed to predict a lead’s likelihood to convert based on historical data, removing the need to manually qualify leads against your ICP. Predictive Lead Scoring models are like Kudus: they are lightning-fast (did you know Kudus can run 70km/h?) and constantly adapt to the changing environment. Kudus are active 24/7 (they never sleep), and their distinct coloration is the result of evolving to adapt to their surroundings & predators. The advantage of a predictive lead scoring model is that the end result remains simple - good vs. bad, 0 vs. 100 - regardless of how complex the inputs get - self-serve or enterprise, account-based scoring, etc. Operationalizing a predictive lead scoring model can be time-intensive: ingesting new data sources as the rest of your company infrastructure evolves and takes on more tools with data your model needs, refreshing the model regularly, and maintaining marketing & sales alignment. Making the switch to a predictive lead scoring model only truly makes sense when your sales organization has reached a level of complexity that requires it to sustain repeatable growth.
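To give a feel for what "predicting likelihood to convert from historical data" can mean, here is a toy Python sketch that fits a small logistic regression by gradient descent and maps the predicted probability to a 0-100 score. This is only an illustration: the article does not say which model a production predictive scoring system uses, and the two features, the eight historical rows, and every function name below are invented for the example.

```python
import math


def predict_probability(weights, bias, features):
    """Logistic function applied to a weighted sum of the features."""
    z = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-z))


def train_logistic(rows, labels, epochs=2000, learning_rate=0.1):
    """Fit weights and bias by batch gradient descent on the log loss."""
    n_features = len(rows[0])
    weights = [0.0] * n_features
    bias = 0.0
    for _ in range(epochs):
        grad_w = [0.0] * n_features
        grad_b = 0.0
        for features, label in zip(rows, labels):
            error = predict_probability(weights, bias, features) - label
            for i, value in enumerate(features):
                grad_w[i] += error * value
            grad_b += error
        weights = [w - learning_rate * g / len(rows) for w, g in zip(weights, grad_w)]
        bias -= learning_rate * grad_b / len(rows)
    return weights, bias


def lead_score(weights, bias, features):
    """Map the predicted conversion probability onto a 0-100 score."""
    return round(100 * predict_probability(weights, bias, features))


# Made-up history: [sells_software, active_in_product] -> converted (1) or not (0).
HISTORY_ROWS = [[1, 1], [1, 1], [1, 0], [1, 0], [0, 1], [0, 1], [0, 0], [0, 0]]
HISTORY_LABELS = [1, 1, 1, 0, 1, 0, 0, 0]

weights, bias = train_logistic(HISTORY_ROWS, HISTORY_LABELS)
hot_lead = lead_score(weights, bias, [1, 1])
cold_lead = lead_score(weights, bias, [0, 0])
```

The point of the sketch is the shape of the workflow rather than the model itself: the weights are learned from past outcomes instead of being set by hand, and retraining on fresh history is how the score "adapts" as your market changes.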

“Where should my business be?”

Now that we've looked at how lead scoring models evolve as your marketing & sales organization grows, let's come back to our initial conversation about what type of model you need for your current business problems. As businesses scale, some buy a tank when a bicycle will do, while others are trying to make a horse go as fast as a rocket. We’ve put together a quick benchmark to assess the state of your go-to-market strategy and where your lead scoring model should be.

Some companies can stick with a Lamb lead scoring model up through 50 employees, while others need a predictive lead scoring model at 75 employees. While there are some clear limiting factors like sales organization complexity and plentiful historical data, understanding the core business problem you're trying to solve (in order to scale revenue) will help guide reflection as well.